ANNOUNCING OPENFLAMINGO: AN OPEN-SOURCE FRAMEWORK FOR TRAINING VISION-LANGUAGE MODELS WITH IN-CONTEXT LEARNING

by: Anas Awadalla and Irena Gao, 28 Mar, 2023

Overview. We are thrilled to announce the release of OpenFlamingo, an open-source reproduction of DeepMind's Flamingo model. At its core, OpenFlamingo is a framework that enables training and evaluation of large multimodal models (LMMs). Check out our GitHub repository and demo to get started!

For this first release, our contributions are as follows:

- 🏋️ A Python framework to train Flamingo-style LMMs (based on Lucidrains' flamingo implementation and David Hansmair's flamingo-mini repository).

- 🪅 A large-scale multimodal dataset with interleaved image and text sequences.

- 🧪 An in-context learning evaluation benchmark for vision-language tasks.

- 🤖 A first version of our OpenFlamingo-9B model based on LLaMA, with much better models to come!

The recent progress in open-source LMMs with the release of BLIP-2 and FROMAGe has shown the exciting potential of multimodal systems. We hope that OpenFlamingo will help drive progress in multimodal machine learning, and we have more exciting contributions in the pipeline, so stay tuned!

Goal. Our goal with OpenFlamingo is to develop a multimodal system that can tackle a diverse range of vision-language tasks. Ultimately, we aim to match the power and versatility of GPT-4 in handling visual and text input. To achieve this goal, we are creating an open-source version of DeepMind's Flamingo model, a LMM capable of processing and reasoning about images, videos, and text. We are committed to build fully open-source models, and believe this transparency is essential for fostering collaboration, accelerating progress, and democratizing access to state-of-the-art LMMs. Our release is the first step towards this goal.

We are sharing the first checkpoint of our OpenFlamingo-9B model. While the model is not yet fully optimized, it demonstrates the potential of this project. By working together and receiving feedback from the community, we can train better LMMs. We encourage the community to participate in the development process by providing feedback and contributing to the repository.

Technical Details. Our implementation largely follows that of Flamingo. Flamingo models are trained on large-scale web corpora containing interleaved text and images, which is crucial for endowing them with in-context few-shot learning capabilities. OpenFlamingo implements the same architecture (Perceiver resamplers, cross-attention layers) proposed in the original Flamingo paper. However, since the training data for Flamingo is not available to the public, we use open-source datasets for training our models. Specifically, the released OpenFlamingo-9B checkpoint is trained on 5M samples from our new Multimodal C4 dataset and 10M samples from LAION-2B.

Multimodal C4

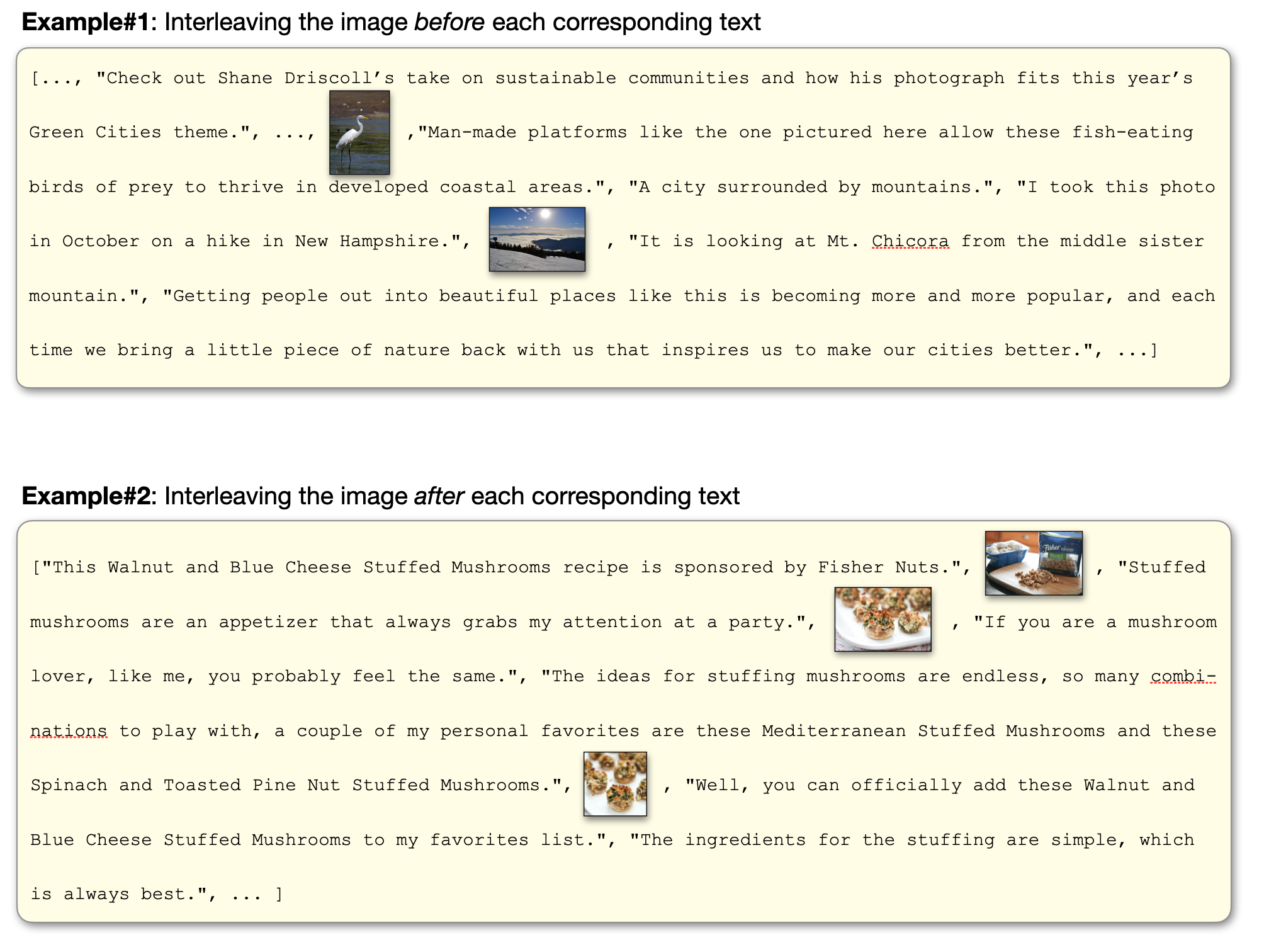

The Multimodal-C4 dataset is an expansion of the text-only C4 dataset, which was used to train T5 models. This dataset is built by our collaborators Jack Hessel and Wanrong Zhu at the Allen Institute for AI. For each document in the C4 en.clean dataset, we retrieve the original webpage from Common Crawl, then collect the downloadable images. Data cleaning is carried out through deduplication and content filtering, which aims to eliminate non-safe for work (NSFW) and unrelated images, such as advertisements. Additionally, we run face detection and discard images with positive identifications. Finally, images and sentences are interleaved using bipartite matching within a document: CLIP ViT/L-14 image-text similarities serve as edge weights. Multimodal-C4 consists of approximately 75 million documents, encompassing around 400M images and 38B tokens. A full release with more detail is coming soon.

Benchmark

To measure the performance of OpenFlamingo, we evaluate on a diverse set of downstream tasks. Our aim is to eventually build an open-source version of Flamingo’s benchmark and extend past that to standardize vision-language task evaluation. Currently we support visual question-answering (VQAv2, OK-VQA), captioning (COCO, Flickr30k), and image classification (ImageNet) tasks. Expect us to add many more evaluation sets that probe model reasoning, biases, and more! You can access the benchmark on the OpenFlamingo repo.

Model release

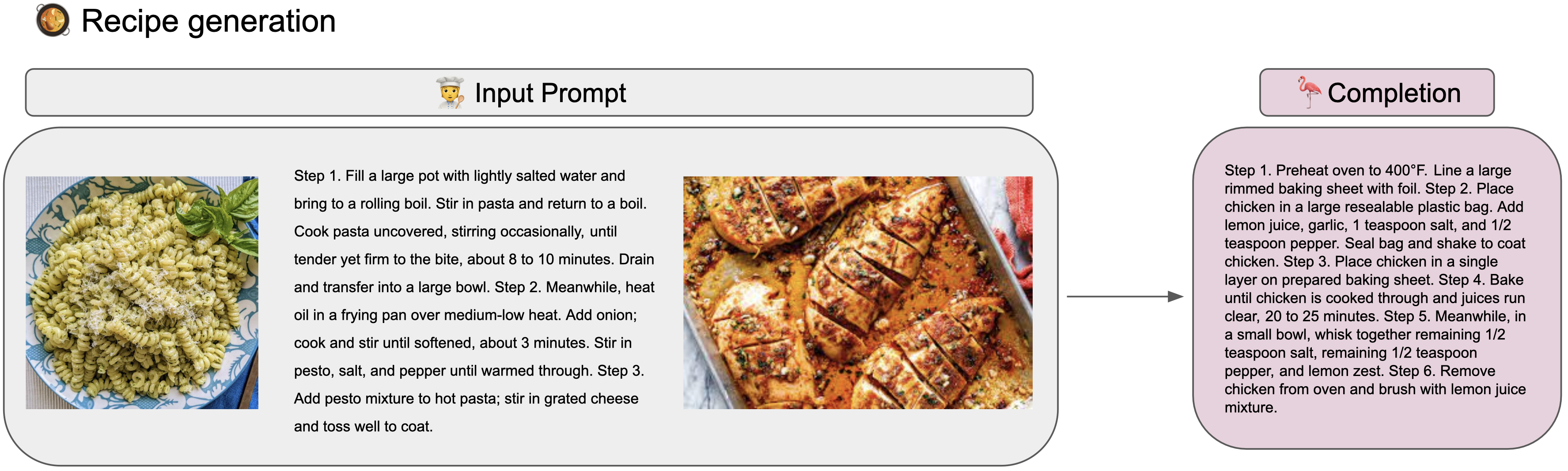

As part of our release, we are also providing a checkpoint from our under-development OpenFlamingo-9B, a LMM built on top of LLaMA 7B and CLIP ViT/L-14. This model is still a work in progress but it can already bring a lot of value to the community. For instance,

Performance

We evaluated our checkpoint on COCO and VQAv2. Here we report the validation performance using a different number of shots.

COCO (CIDEr)

| 0-shot | 4-shot | 8-shot | 16-shot | 32-shot | |

| OpenFlamingo-9B* | 65.5 | 74.3 | 79.3 | 81.8 | 84.5 |

| DeepMind Flamingo-9B | 79.4 | 93.1 | 99.0 | 102.2 | 106.3 |

VQAv2 (VQA accuracy)

| 0-shot | 4-shot | 8-shot | 16-shot | 32-shot | |

| OpenFlamingo-9B* | 43.5 | 44.0 | 47.5 | 48.9 | 50.3 |

| DeepMind Flamingo-9B | 51.8 | 56.3 | 58.0 | 59.4 | 60.4 |

*Note that we report validation performance (using the same setup outlined in Flamingo paper) for OpenFlamingo-9B while DeepMind Flamingo-9B performance is on test data.

Safety and ethical considerations

As OpenFlamingo-9B is built on top of frozen LLaMA and CLIP models, you can expect OpenFlamingo to inherit the harms of the parent models. We understand that by releasing these models, they may be used in harmful ways. However, it is important for the research community to study the harms of large multimodal models, and we believe that open-sourcing these models will enable the community to develop better ways to mitigate these harms in future models.

We emphasize that OpenFlamingo-9B is a research artifact and not a finished product. It can produce unintended, inappropriate, offensive, and/or inaccurate results. We thus advocate for caution and thorough evaluations before using our models in any real applications.

Contributions

Thanks to:

- Josh Gardner and Yonatan Bitton for implementing the evaluation benchmark.

- Kalyani Marathe for implementing the data pipeline and improving code quality.

- Yusuf Hanafy for working on the demo.

- Wanrong Zhu, Jack Hessel, and Samir Gadre for building the Multimodal C4 dataset.

- Jenia Jitsev for helping us with large scale training.

- Mitchell Wortsman, Gabriel Ilharco, Simon Kornblith, Pang Wei Koh for technical discussions and for feedback on this blog.

- Ludwig Schmidt for being our main advisor on this project and for their support.

Acknowledgements

This code is based on Lucidrains' flamingo implementation and David Hansmair's flamingo-mini repo. Thank you for making your code public! We also thank the OpenCLIP team as we use their data loading code and take inspiration from their library design.

We would like to thank Jean-Baptiste Alayrac and Antoine Miech for their advice, Rohan Taori, Nicholas Schiefer, Deep Ganguli, Thomas Liao, Tatsunori Hashimoto, and Nicholas Carlini for their help with assessing the safety risks of our release. This research is supported in part by NSF Institute on the Foundations of Machine Learning (IFML). Thanks to Stability AI for providing us with compute resources to train these models!